What is Generative AI?

Generative AI is AI that creates something new and original. Imagine an artist who can paint, compose music, and write stories all at once. That's what generative AI does, but in the digital world. It uses algorithms to generate new content, whether it's a piece of music, a work of art, or a block of text, that looks or sounds like it was made by a human.

Figure: Created in a minute with the Midjourney application. Left: "Future 2050 World" art. Right: character art created for a video game.

To make this a bit more tangible, consider a simple example. You've probably seen those apps that turn your photos into artwork in the style of famous painters. This is generative AI at work. It learns from thousands of paintings and then applies that learned style to your photo, creating something new that didn't exist before - a unique piece of art based on your picture.

Evolution and Historical Background

The story of generative AI is like a journey from humble beginnings to an exciting present. In the early days, say around the 1950s and 60s, AI was a fledgling field. Back then, it was more about computers following very specific instructions to perform tasks. They weren't creating anything new; they were just doing what they were told. Fast forward to the 1980s and 90s, and things started to get interesting. This was when neural networks (think of them as a simplified digital version of the human brain), an idea that had been around for decades, began to gain real traction. These networks didn't just follow instructions; they could learn from data and start making their own decisions. But even then, they were like students still learning the ropes, limited by the technology of the time.

The real game-changer came in the 2000s with the advent of deep learning. This is like giving our student a supercomputer brain. Deep learning meant that AI could now learn from massive amounts of data, finding connections and patterns at a scale no person could work through by hand. This is when AI started creating things that were genuinely new and original. The most recent chapter in this story, from the 2010s onward, is when things really start to feel like science fiction. We now have AI models like ChatGPT for text and DALL-E for images. These are like our student growing up to be a genius artist and writer. They can generate realistic and complex content that's often hard to distinguish from something a human would create.

But with great power comes great responsibility. As generative AI continues to advance, it's also raising important questions about ethics, such as how to prevent misuse and how to respect privacy and authenticity. It's an exciting time for generative AI, but it's also a time for careful consideration of its impact on society. In essence, generative AI has come a long way from being a simple rule-follower to a creator of diverse and intricate outputs. It's like watching a child grow up to be a prodigy, full of potential but also needing guidance and wisdom.

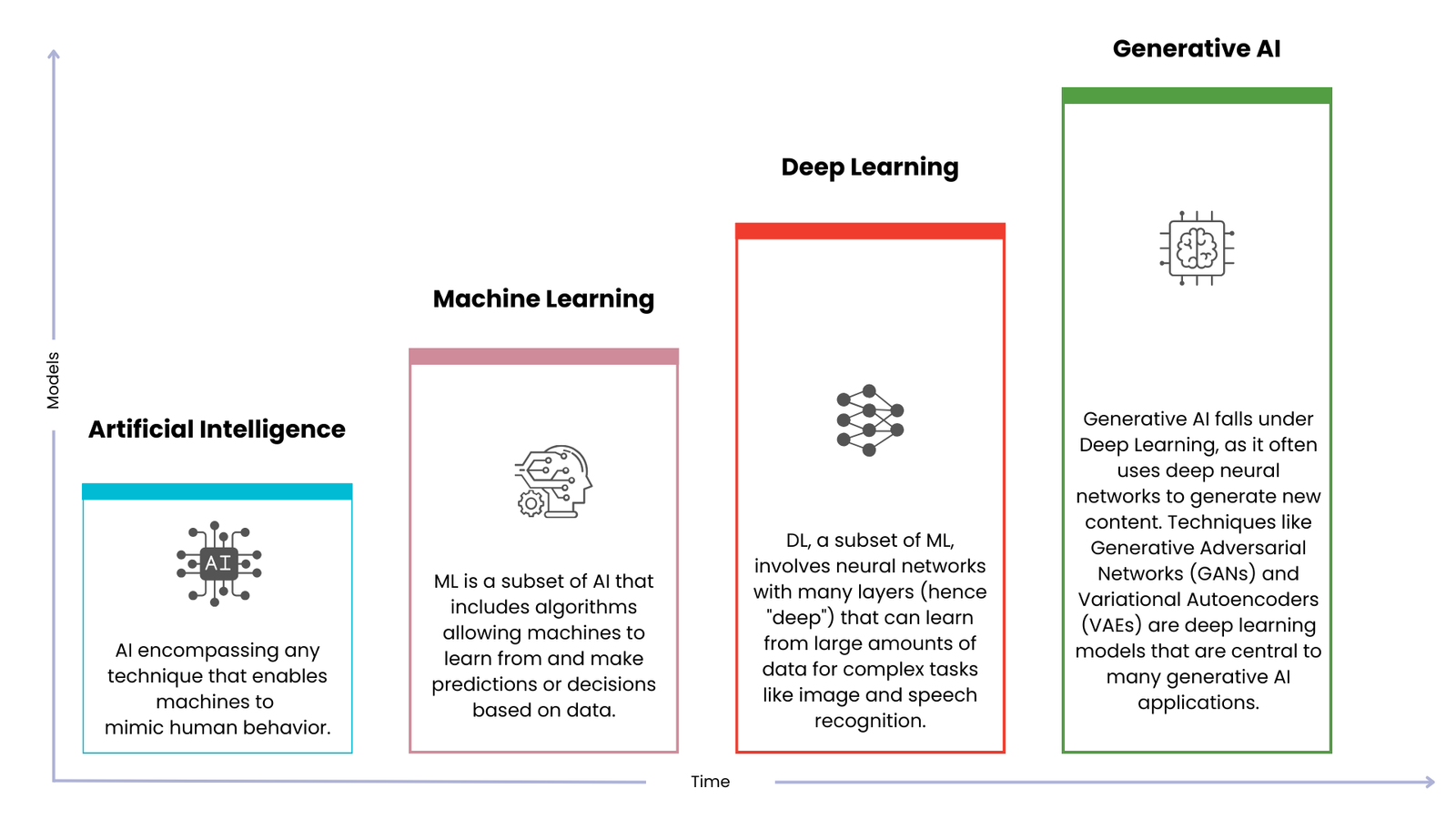

From AI to Generative AI: Understanding the Layers of Intelligent Technology

In today's tech landscape, Artificial Intelligence (AI) is a transformative force, extending from basic algorithms like email filters to advanced neural networks behind voice assistants. As we delve deeper, we find Machine Learning (ML) and Deep Learning (DL), where machines learn from data. At the forefront of this evolution is Generative AI, a field where AI moves beyond data processing to creating original content, marking a shift from task-oriented machines to ones capable of creativity and innovation. Let's explore these layers of AI and their unique roles in shaping our interaction with technology.

Figure: The layers from AI to Generative AI.

• Artificial Intelligence (AI): AI is like giving a computer a brain that can think, learn, and make decisions similar to humans. It's a wide field that covers everything from simple computer programs that can play chess to complex systems that analyze big data. A simple example of AI is a spam filter in your email. It learns to identify what is spam and what is not, based on patterns and rules.

• Machine Learning (ML): ML is a part of AI where machines learn from experience. Imagine you're teaching a computer to recognize pictures of cats. You show it thousands of cat pictures, and it starts to understand what a cat looks like. This is ML: the computer is learning from the data. Netflix recommendations are a practical example. Netflix uses ML to learn what types of shows or movies you like and suggests new ones based on what you’ve watched.

• Deep Learning (DL): DL is a more advanced version of ML. It uses something called neural networks, which are designed to imitate how the human brain works. These networks can learn from a vast amount of data and can understand more complex patterns. For example, voice assistants like Siri or Alexa use deep learning. They can understand your voice commands because they’ve been trained on large datasets of human speech.

• Generative AI: This is a specialized area in DL where the AI doesn’t just learn from data, it creates new content that didn’t exist before. It's like teaching the AI to be an artist or a composer. Deepfakes are one example. They use AI to create realistic video and audio recordings that look like real people saying or doing things they never did.

Generative AI Models: An Integrated Perspective

Generative AI models encompass a range of technologies that enable the creation of new, original content, data, or information based on learned patterns and structures from existing data. These models are pivotal in fields where creativity, data augmentation, and advanced pattern recognition are essential. Here's a consolidated overview of four families of generative AI models, namely Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), Transformer Models, and Hybrid Generative AI Models, along with how they work, their advantages, and their real-world applications.

1. Generative Adversarial Networks (GANs): GANs function through a dual-network system where the generator creates content and the discriminator evaluates it, leading to highly realistic outputs. Training a GAN is like coaching two teams where one creates art (generator) and the other judges it (discriminator). The art-creating team starts from random inputs and learns to make more convincing art pieces, while the judging team learns to better differentiate between real and artificial art. Over time, the art-making team becomes so good that their art pieces are almost indistinguishable from real ones. GANs are used in image generation, enhancement, and editing, for instance creating photorealistic images of people who don't exist or converting sketches to detailed images. Tata Consultancy Services (TCS) has been exploring the use of GANs for various applications, including enhancing image quality in their IT solutions and creating simulations for training AI models in different scenarios.

2. Variational Autoencoders (VAEs): VAEs compress data into a latent space and reconstruct it, introducing randomness to generate variations of the original data. Training VAEs involves two parts that learn together. One team learns to summarize complex data into simpler forms (encoder), and another team learns to rebuild the original data from this summary (decoder). The training aims to make the rebuilding as accurate as possible while also introducing some creativity in the process. VAEs are applied in fields like drug discovery, where they can generate molecular structures. They're also used in anomaly detection and image denoising. Wipro employs VAEs to create realistic, high-quality data capable of closely replicating real-world situations.

3. Transformer Models: Transformers process sequences of data, focusing on contextual relationships, and are adaptable to various tasks through pre-training and fine-tuning. Transformers are trained in two main stages. First, they learn from a large and diverse set of data, like reading lots of different books (pre-training). Then, they specialize by training on a specific type of data, like focusing on science fiction novels (fine-tuning). This makes them versatile, allowing them to adapt to various tasks. Transformers are widely used in natural language processing for tasks like language translation, content generation, and even in complex decision-making processes. Flipkart, an e-commerce leader, could utilize Transformer models for product recommendation systems, enhancing customer experience by providing personalized suggestions based on user browsing and purchase history.

4. Hybrid Generative AI Models: Hybrid models combine elements from different generative models, harnessing their strengths for complex, multifaceted tasks. Training hybrid models involves mixing different training techniques. It's like a combined training regimen for an athlete where they not only run but also swim and cycle, preparing them for a triathlon. The exact training depends on the event they are preparing for (the specific AI task) and their unique strengths (the model's architecture). Hybrid models are applied in sophisticated tasks that require a combination of skills, like advanced robotics, where a model needs to perceive, understand, and interact with its environment in complex ways. In the food delivery and restaurant aggregator business, Zomato uses hybrid models that combine aspects of GANs and Transformers to analyse customer reviews and images and enhance its recommendation systems.
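To make the Transformer entry in the list above a bit more tangible, here is a minimal sketch of scaled dot-product attention, the mechanism Transformers use to weigh contextual relationships between items in a sequence. It is written in plain Python for readability rather than speed, and the function names and toy vectors are illustrative assumptions, not code from any particular library.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy example: the query points strongly toward the first key.
queries = [[10.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
result = attention(queries, keys, values)
print(result)
```

Because the query lines up with the first key, almost all of the attention weight lands on the first value vector. Real Transformers apply the same idea with learned projections and many attention heads in parallel.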

The versatility of these models lies in their ability to not only interpret but create and enhance data, leading to applications across numerous sectors:

• Creative Industries: GANs and VAEs are revolutionizing fields like digital art, music composition, and game design by generating novel and diverse content.

• Healthcare and Pharmaceutical: VAEs contribute significantly to drug discovery and genomics, where they assist in creating molecular structures and predicting genetic patterns.

• Language and Communication: Transformers have transformed natural language processing, enabling sophisticated language translation, content generation, and even automated journalism.

• Robotics and Autonomous Systems: Hybrid models are crucial in developing advanced robotics and autonomous systems, where a blend of perception, cognition, and action is required.

These models are not just expanding the horizons of AI's capabilities but also providing personalized, efficient, and creative solutions across industries. From augmenting data for research to tailoring user experiences in digital platforms, generative AI models are key drivers in the ongoing evolution of artificial intelligence.

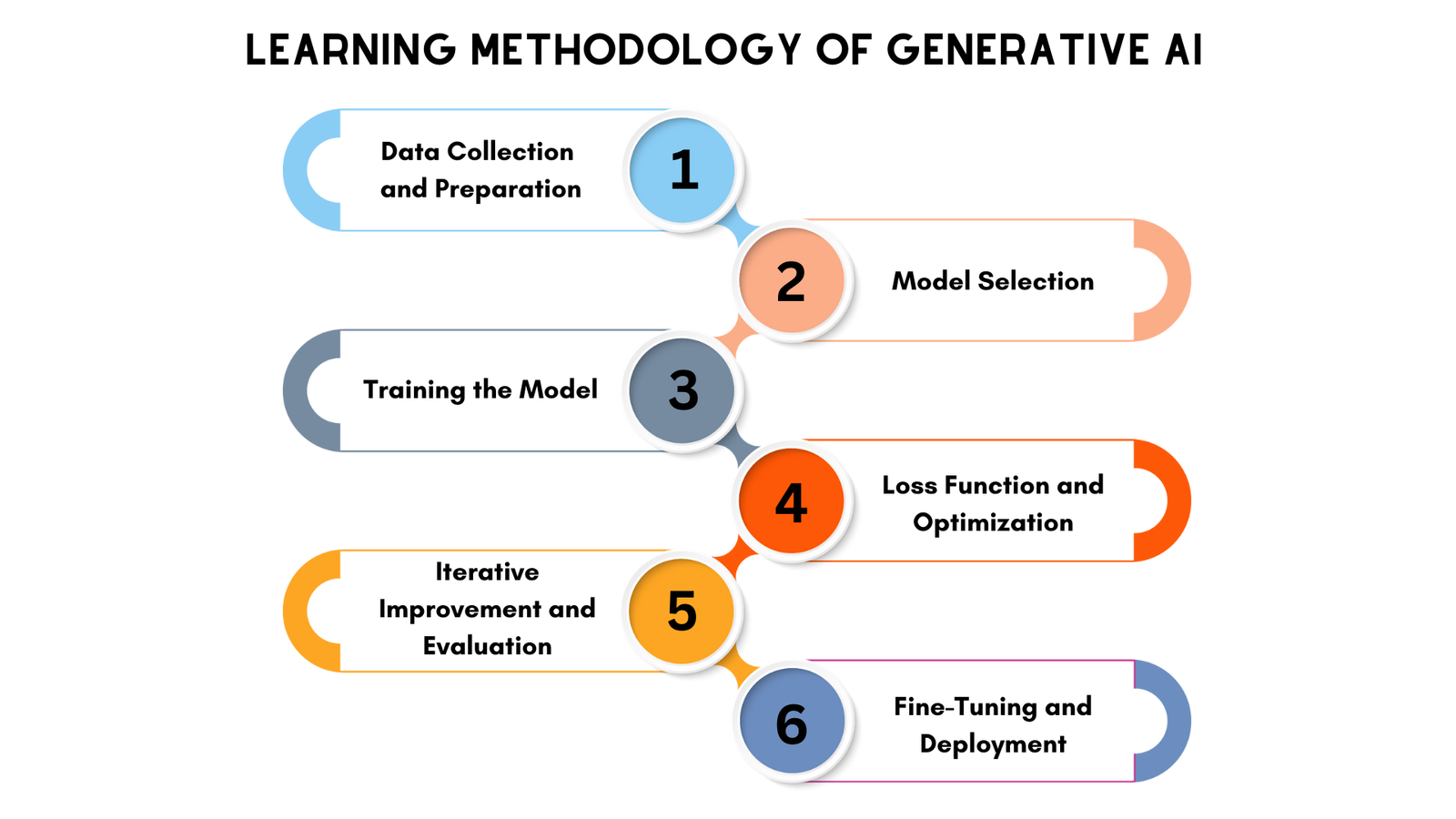

How Generative AI Works

Generative AI works by teaching a system to understand its training data and then create new, original outputs that mimic that real-world data. This process typically involves six key steps:

Figure: The six-step learning methodology behind how a generative model works.

1. Data Collection and Preparation

Initial Step: The foundation of Generative AI learning is a vast and varied dataset. The data must be collected and often preprocessed to ensure quality and relevance.

Example: For an AI that generates human faces, thousands of images of faces from different angles, lighting conditions, and expressions are gathered and formatted for training.
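As a rough illustration of what "formatted for training" can mean, the sketch below shows two common preparation tasks: scaling pixel values and holding out a validation set. The function names, the [-1, 1] target range, and the 20% validation fraction are assumptions chosen for illustration; real pipelines vary by model and dataset.

```python
import random


def normalize_pixels(image):
    """Scale 8-bit pixel values (0 to 255) into the range [-1.0, 1.0]."""
    return [[pixel / 127.5 - 1.0 for pixel in row] for row in image]


def train_val_split(items, val_fraction=0.2, seed=0):
    """Shuffle the items and hold out a fraction of them for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# A tiny 2x2 grayscale "image" and ten hypothetical image IDs.
tiny_image = [[0, 255], [128, 64]]
normalized = normalize_pixels(tiny_image)
train_ids, val_ids = train_val_split(range(10))
print(normalized)
print(len(train_ids), len(val_ids))
```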

2. Model Selection

Choosing the Right Architecture: Depending on the task, different generative models like GANs, VAEs, or Transformers are selected. Each model has its strengths and is suitable for specific types of generation tasks.

Example: GANs are often chosen for tasks requiring high-quality image generation.

3. Training the Model

Learning from Data: The selected model is then trained on the prepared dataset. This involves the model learning to recognize patterns, features, and structures within the data.

For GANs: This involves the iterative process of the generator creating outputs and the discriminator evaluating them, with continuous feedback loops for improvement.

For VAEs: The model learns to encode data into a compressed representation and then decode it back, introducing variability.

For Transformers: The model is pre-trained on a large dataset to understand context and relationships within the data and then fine-tuned for specific tasks.
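The "introducing variability" part of VAE training can be sketched in a few lines. The example below shows the reparameterization trick (sampling a latent point from the mean and variance the encoder produces) and the KL term that is commonly added to a VAE's loss to keep the latent space well behaved. There is no learned encoder or decoder here; the function names and toy numbers are assumptions for illustration only.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, where eps is standard normal noise."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, log_var)
    ]


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return 0.5 * sum(
        m * m + math.exp(lv) - 1.0 - lv
        for m, lv in zip(mu, log_var)
    )


rng = random.Random(0)
mu = [0.5, -1.0]        # hypothetical encoder output: latent means
log_var = [0.0, -2.0]   # hypothetical encoder output: log-variances
z = reparameterize(mu, log_var, rng)
print("sampled latent point:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))
```

Sampling twice from the same `mu` and `log_var` gives two slightly different latent points, which is what lets a decoder produce variations of the same input.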

4. Loss Function and Optimization

Minimizing Error: Generative models use a loss function to measure how far the generated data is from the actual data. The training process involves minimizing this loss.

Example: In GANs, part of the loss function measures how well the discriminator is fooled by the generator, prompting improvements in the generated output.
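The GAN example above can be written down as a small sketch. It uses the standard binary cross-entropy form of the discriminator loss and the commonly used "non-saturating" generator loss. The inputs `d_real` and `d_fake` stand for the discriminator's probability that a real or a generated sample is real; these names, and the small `eps` added for numerical safety, are assumptions made for this illustration.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Low when real samples score near 1 and generated samples score near 0."""
    return -(math.log(d_real + eps) + math.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Low when the discriminator is fooled, i.e. d_fake is close to 1."""
    return -math.log(d_fake + eps)


# A discriminator that is easily fooled versus one that is not.
print("generator loss, fooled discriminator:", generator_loss(0.9))
print("generator loss, sharp discriminator: ", generator_loss(0.1))
print("discriminator loss when confident:   ", discriminator_loss(0.9, 0.1))
print("discriminator loss when guessing:    ", discriminator_loss(0.5, 0.5))
```

The two losses pull in opposite directions: a higher `d_fake` lowers the generator's loss but raises the discriminator's, which is the feedback loop that drives both networks to improve.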

5. Iterative Improvement and Evaluation

Continuous Learning: The model undergoes numerous iterations of training, where it progressively improves its generation capabilities.

Evaluation: The outputs are evaluated for quality, diversity, and resemblance to real-world data.
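To show what iterative improvement and evaluation look like in miniature, here is a deliberately simple stand-in for a generative model: a "generator" with a single parameter that learns to match the average of some real data by gradient descent. It is not a GAN, VAE, or Transformer, and every name and number in it is an assumption made for illustration. The point is only to show the loss shrinking over iterations and a basic check of resemblance and diversity afterwards.

```python
import random
import statistics


def train_toy_generator(real_data, steps=200, lr=0.1, batch_size=64, seed=0):
    """Fit a one-parameter generator (theta + noise) to the mean of real_data."""
    rng = random.Random(seed)
    target_mean = statistics.fmean(real_data)
    theta = 0.0    # the generator's single learnable parameter
    history = []   # loss recorded at every iteration
    for _ in range(steps):
        generated = [theta + rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        gap = statistics.fmean(generated) - target_mean
        history.append(gap ** 2)   # squared gap between the two means
        theta -= lr * 2.0 * gap    # gradient of gap**2 with respect to theta
    return theta, history


def evaluate(real_data, generated):
    """Report resemblance (gap between means) and diversity (spread of outputs)."""
    return {
        "mean_gap": abs(statistics.fmean(generated) - statistics.fmean(real_data)),
        "diversity": statistics.pstdev(generated),
    }


real = [3.0, 4.0, 5.0]
theta, history = train_toy_generator(real)
sample_rng = random.Random(1)
samples = [theta + sample_rng.gauss(0.0, 1.0) for _ in range(1000)]
report = evaluate(real, samples)
print(f"first loss={history[0]:.3f}, last loss={history[-1]:.3f}")
print(report)
```

In a real project the evaluation step would use richer measures than a mean and a standard deviation, but the loop has the same shape: generate, measure the error, adjust, and repeat.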

6. Fine-Tuning and Deployment

Refining the Model: After the initial training, the model might be fine-tuned with additional data or adjustments to better suit specific applications.

Deployment: The trained model is then deployed in real-world scenarios, where it generates new content or data based on its training. Once deployed, Generative AI models can be adapted and retrained based on user feedback and changing data trends to maintain or improve their performance.

Popular Real-World Generative AI Use Cases

Generative AI has a multitude of real-world applications, significantly impacting various industries and domains. Some of them are:

• Art Creation: Generating new artworks, imitating styles of famous painters or creating entirely novel artistic expressions.

• Game Development: Creating realistic game environments and characters, enhancing the visual and interactive experience.

• Film and Animation: Generating special effects, backgrounds, and even animating characters for movies and animated films.

• Music Composition: Composing new music pieces in various genres, sometimes even in the style of specific composers.

• Fashion Design: Generating new fashion designs and patterns, predicting trends based on past data.

• Advertising and Marketing: Creating personalized content for marketing campaigns, including images and text tailored to specific audiences.

• Drug Discovery: Aiding in the discovery of new molecular structures for drug development, accelerating the research process.

• Content Generation for Social Media: Generating creative content for social media posts, including images and text.

• Text Generation: Writing articles, stories, or even generating code, aiding in content creation and software development.

• Voice Synthesis: Creating realistic voiceovers and synthetic speech for various applications, from virtual assistants to dubbing in films.

• Architectural Design: Assisting in creating architectural designs and visualizations, offering innovative and efficient design solutions.

• Personalized Learning and Education: Generating customized educational content, adapting to individual learning styles and needs.

• Automotive Design: Assisting in generating new car models and design elements, enhancing both aesthetics and functionality.

• Data Augmentation: Enhancing datasets in machine learning, especially for training other AI models, by generating synthetic data.

• Virtual Avatars and Digital Humans: Creating realistic virtual characters for various applications, from customer service bots to virtual influencers.

These applications showcase the vast and diverse potential of generative AI in transforming and enhancing various facets of our professional and personal lives.

Benefits, Limitations and Ethical Concerns of Generative AI

Generative AI stands at the forefront of technological innovation, offering significant benefits such as unparalleled creativity in content generation, enhanced efficiency in various tasks, and personalized solutions across industries. However, it faces limitations like potential quality and authenticity issues, data dependence, and high computational costs. Ethically, generative AI raises critical concerns, including the risks of misinformation through deepfakes, intellectual property disputes, privacy issues, and the potential for perpetuating biases. Balancing these benefits, limitations, and ethical considerations is vital for harnessing the full potential of generative AI responsibly. Let us discuss them one by one:

• Benefits of Generative AI:

Generative AI is a groundbreaking innovation that has brought a new dimension of creativity and innovation, allowing for the creation of unique content across various domains such as art, music, text, and product design. It significantly boosts efficiency and productivity by automating and speeding up the process of content generation, which is particularly beneficial in industries where time and resource savings are crucial. In the realm of machine learning, it plays a key role in data augmentation, enhancing datasets which leads to improved model training and performance. Its ability to offer customization and personalization is transformative, providing tailored solutions in marketing, education, and beyond. Generative AI is also an invaluable problem-solving tool, especially in complex fields like drug discovery and material science, where it can generate innovative solutions that might elude human researchers.

• Limitations of Generative AI:

However, generative AI is not without its limitations. One of the primary concerns is the quality and authenticity of AI-generated content. Often, this content may lack the nuanced understanding and emotional depth that human creators bring to their work. Ethical and societal implications are also significant, with issues like misinformation, intellectual property rights violations, and privacy concerns arising from its use. The dependence on data is another limitation, as the quality of the AI's output is directly tied to the quality and quantity of the training data it receives. Computational costs are high, which can be a barrier for smaller organizations or individuals. Furthermore, there is a risk of bias and fairness issues, as AI models can inadvertently perpetuate and amplify biases present in their training data, leading to skewed or unfair outcomes.

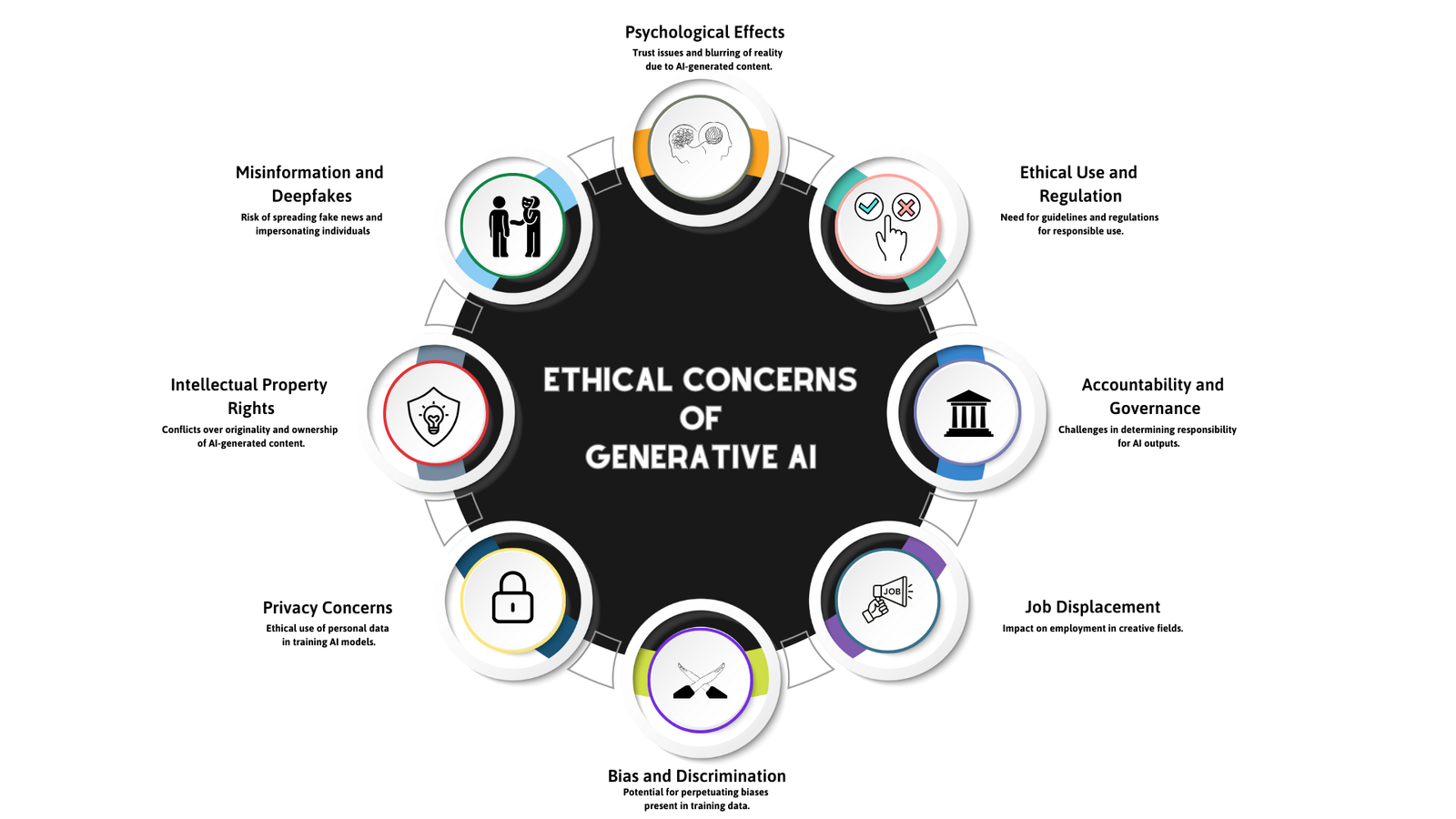

• Ethical Concerns of Generative AI:

The ethical concerns surrounding generative AI highlight the need for a balanced approach to its development and use. These concerns include:

Figure: The ethical concerns of generative AI.

Addressing these concerns calls for a multidisciplinary effort, involving ethicists, technologists, policymakers, and the broader public, to steer the development of generative AI in a direction that maximizes its benefits while minimizing potential harms. As this technology continues to evolve, so must our strategies for managing its ethical implications.

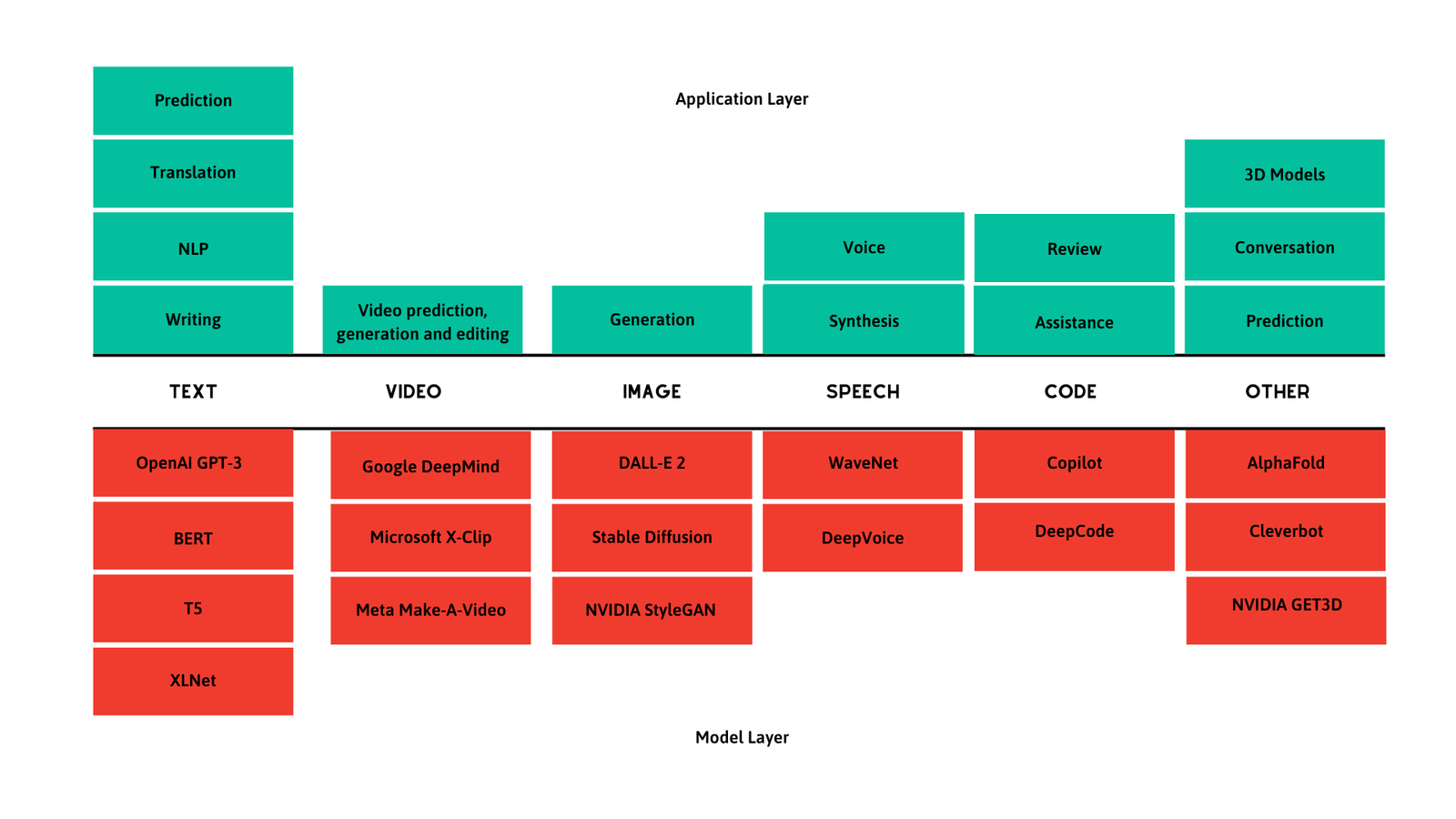

Current Market Landscape of Generative AI

Figure: The current market landscape of generative AI.

The current market landscape of generative AI is vibrant and diverse, spanning several domains that each use advanced AI models to change the way we interact with and create digital content. In text, models like OpenAI's GPT-3, along with BERT, T5, and XLNet, power prediction, translation, and other natural language processing tasks. In video, Google DeepMind is pushing boundaries in video prediction, generation, and editing. Image generation sees DALL-E 2 and NVIDIA's StyleGAN creating striking visuals, while in speech, WaveNet and DeepVoice are synthesizing ever more realistic human speech. Code generation tools like Copilot are revolutionizing programming, and in other domains, AI models like AlphaFold are making groundbreaking predictions in fields as complex as biology. This dynamic and fast-evolving landscape is reshaping how we think about creativity and problem-solving across industries. The figure shows the applications and models used in each of these areas.

Future Scope

Over the next decade, generative AI is set to evolve dramatically through its collaboration with other cutting-edge technologies. One significant area is its integration with the Internet of Things (IoT) and Big Data. This synergy will enable generative AI to access vast and varied data sources, enhancing its ability to create more accurate and contextually relevant content. This could revolutionize areas like smart cities, where AI can generate solutions for traffic management, energy use, and public safety by analyzing real-time data from a network of sensors.

Another promising area of collaboration is with blockchain technology. Blockchain's immutable ledger could provide a solution to the intellectual property concerns associated with generative AI. By recording the creation and modification of AI-generated content on a blockchain, it's possible to ensure the authenticity, ownership, and traceability of this content. This integration could foster trust and transparency in AI-generated media, art, and other digital assets, potentially opening new markets and business models centered around AI-created content.

The fusion of generative AI with augmented and virtual reality (AR/VR) technologies is also set to transform several sectors. In education and training, for instance, this combination could lead to the creation of highly immersive and personalized learning experiences. In entertainment and gaming, the integration could provide users with deeply engaging and ever-changing virtual worlds. Additionally, the potential intersection of generative AI with emerging fields like quantum computing could further amplify its capabilities, making it more efficient and powerful than ever before. This could lead to breakthroughs in complex problem-solving and the creation of content at a scale and speed currently unimaginable. In essence, the next decade will likely witness generative AI not just as a standalone technology, but as a pivotal component in a larger ecosystem of interconnected and complementary technologies, driving innovation and creating opportunities across diverse domains.

Conclusion

The transformative potential of Generative AI is undeniable, and as we strive to fully harness its capabilities, it is imperative that we uphold vigilance and responsibility in our endeavors. By persistently advancing in research and fostering collaborative efforts, we can unleash the complete potential of Generative AI, paving the way for unparalleled progress. It's important to note that Generative AI research evolves through practice, and initiatives like iNeuBytes AI Virtual Internship Program play a pivotal role in this evolution.